Gödel Architecture

An introduction to the in-house Mixture of Experts (MoE) architecture powering the models.

Aditya Prasad

10/29/2021 · 3 min read

Structure

A foundation model built for real-world tasks

Add-on layers, called Doors, that plug into the core to handle robotics, code, search, and other domain tasks

A lightweight design that runs smoothly on local devices and adapts in real time: the core LLM can run locally using only its shared experts and hands more complex tasks off to the Doors

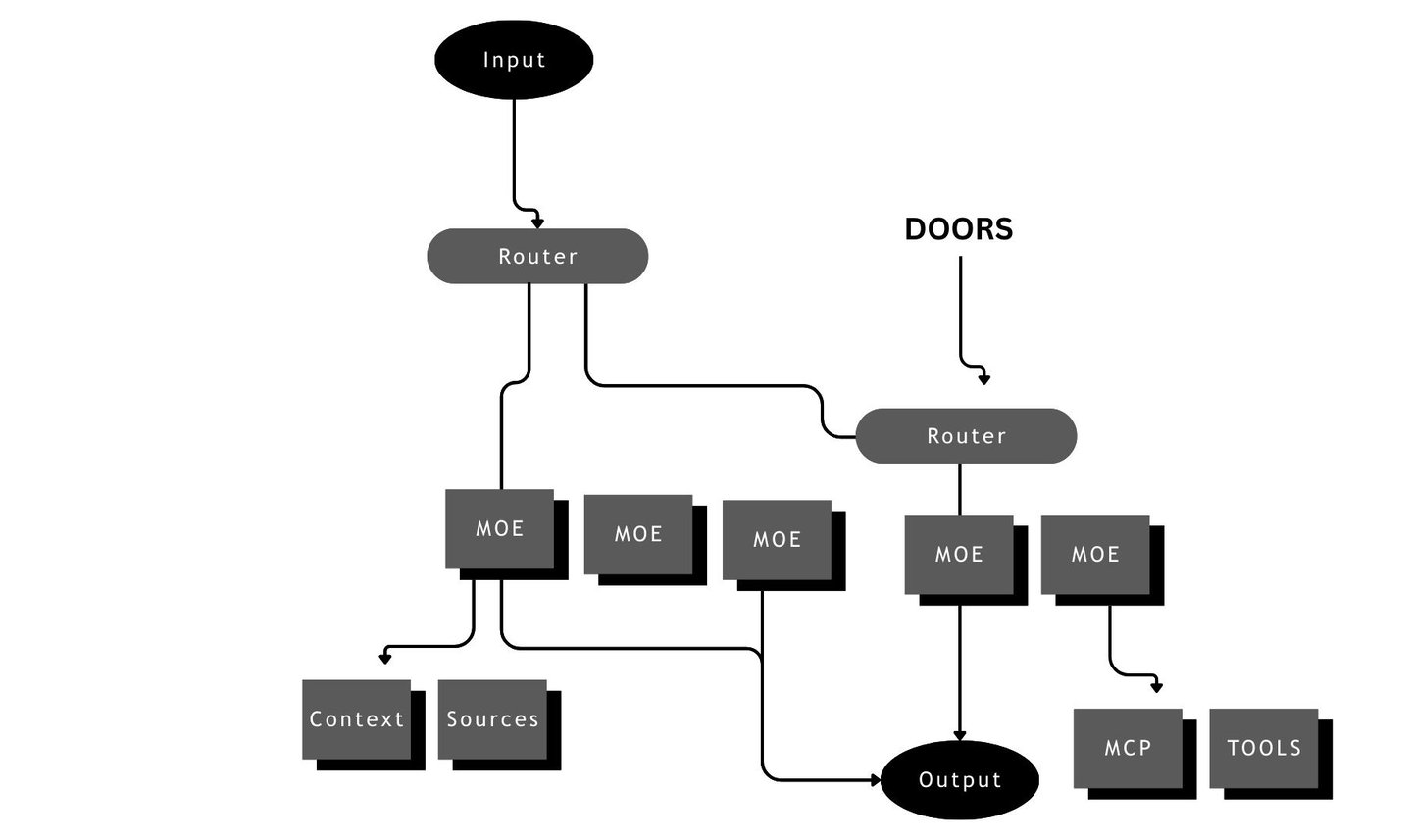

The architecture behind Gödel AI

Gödel AI is built around one simple idea: the way we design AI should match the way the real world works.

Some tasks need broad reasoning. Others need deep, specialized skills. No single model can do everything well, and making it bigger does not automatically make it smarter.

Gödel AI solves this by splitting intelligence into two layers: a fast general brain and a set of domain experts.

The core model

The core is a compact language model that focuses on reasoning, planning, and understanding the user. Its job is not to do heavy specialist work.

A smaller core stays fast, cheap to run, and can even work on local devices.

The core uses a Mixture of Experts (MoE) design: a gating network sends each token to only a few experts at a time. Per-token compute therefore stays roughly constant while the total number of experts, and with it the model's capacity, can grow.

Read more about MoE ideas:

https://arxiv.org/abs/1701.06538

https://arxiv.org/abs/2006.16668
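To make the sparse activation concrete, here is a minimal sketch of one MoE layer in plain Python. It assumes top-k gating in the style of the sparsely-gated MoE paper linked above, plus a set of always-on shared experts (the structure implied by the note that the local core runs with only shared experts). The experts are toy functions and every name is illustrative; in a real model each expert is a feed-forward network operating on tensors, and training adds an auxiliary load-balancing loss so tokens spread across experts.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Vector,
    gate_weights: List[Vector],
    routed_experts: List[Expert],
    shared_experts: List[Expert],
    k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Sparse MoE layer: shared experts always run, only the top-k routed experts run."""
    # Gate logit for expert i is the dot product of x with that expert's gate vector.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Keep the k highest-scoring experts and renormalise their weights with a softmax.
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    weights = softmax([logits[i] for i in chosen])

    out = [0.0] * len(x)
    for expert in shared_experts:  # always active, unweighted
        out = [o + y for o, y in zip(out, expert(x))]
    for weight, i in zip(weights, chosen):  # only k routed experts do any work
        out = [o + weight * y for o, y in zip(out, routed_experts[i](x))]
    return out, chosen


# Toy usage: four routed experts that scale their input, one identity shared expert.
routed = [lambda v, c=c: [c * vi for vi in v] for c in (1.0, 2.0, 3.0, 4.0)]
shared = [lambda v: list(v)]
gates = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0], [-1.0, 0.0]]
output, selected = moe_forward([1.0, 0.0], gates, routed, shared, k=2)
print(selected)  # [2, 0]
```

Only two of the four routed experts run for this input. That is where the savings come from: adding routed experts grows capacity without increasing the work done per token.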

The Doors layer

The Doors layer is where domain intelligence lives.

Each Door is a cluster of specialist models trained on focused, real-world data. Instead of trying to make one giant model good at everything, we let many smaller experts handle different jobs.

Examples of Door clusters:

software development (frontend, backend, debugging)

robotics control

medical guideline reasoning

finance and risk modeling

simulation and verification

Each Door has its own internal logic, tools, and validators. Doors return concrete, validated outputs, not just text.
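One way to picture a Door is as a solver bundled with its validators, returning a structured result rather than free text. The sketch below assumes that shape; `Door`, `DoorResult`, and the compound-interest finance Door are hypothetical illustrations, not Gödel's actual interfaces.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional

Task = Dict[str, Any]
Output = Dict[str, Any]
# A validator inspects the task and the output; it returns an error message, or None if the check passes.
Validator = Callable[[Task, Output], Optional[str]]


@dataclass
class DoorResult:
    door: str
    output: Output
    valid: bool
    errors: List[str] = field(default_factory=list)


@dataclass
class Door:
    name: str
    solve: Callable[[Task], Output]
    validators: List[Validator] = field(default_factory=list)

    def handle(self, task: Task) -> DoorResult:
        """Solve the task, then run every validator and report all failures."""
        output = self.solve(task)
        errors = []
        for check in self.validators:
            message = check(task, output)
            if message is not None:
                errors.append(message)
        return DoorResult(self.name, output, valid=not errors, errors=errors)


# Hypothetical finance Door: compound growth with two sanity checks.
def compound(task: Task) -> Output:
    value = task["principal"] * (1 + task["rate"]) ** task["years"]
    return {"future_value": round(value, 2)}


def rate_in_range(task: Task, output: Output) -> Optional[str]:
    return None if 0 <= task["rate"] <= 1 else f"rate {task['rate']} outside [0, 1]"


def not_below_principal(task: Task, output: Output) -> Optional[str]:
    ok = output["future_value"] >= task["principal"]
    return None if ok else "future value below principal"


finance = Door("finance", compound, [rate_in_range, not_below_principal])
print(finance.handle({"principal": 1000, "rate": 0.05, "years": 2}))
```

The caller receives a `valid` flag and a list of concrete errors, so an invalid result can be retried or rejected instead of being passed along as plausible-sounding text.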

The router

The router is the manager. It decides which Doors are needed for the current task. The router can be simple at first and more intelligent later.

Routing strategies may include:

rule-based decisions

classifiers trained from usage logs

reinforcement learning when enough data is available

The router receives signals from the core and picks the smallest set of specialists needed. This is how Gödel AI stays efficient even when many experts exist.

This makes the system modular. You can upgrade or replace one Door without touching the core.
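The simplest strategy in the list, rule-based routing, can be sketched in a few lines. The keyword rules and Door names below are hypothetical placeholders. The router returns only the Doors whose rules fire, so an everyday request touches no specialists and the core answers alone.

```python
import re
from typing import Dict, List

# Hypothetical keyword rules; a later router could replace these with a trained classifier.
ROUTING_RULES: Dict[str, List[str]] = {
    "software": [r"\bbug\b", r"\bcode\b", r"\bcompile", r"\bfunction\b", r"\bapi\b"],
    "robotics": [r"\brobot", r"\bgripper\b", r"\btrajectory\b"],
    "medical": [r"\bdosage\b", r"\bguideline", r"\bpatient\b"],
    "finance": [r"\brisk\b", r"\bportfolio\b", r"\binterest rate\b"],
}


def route(request: str, rules: Dict[str, List[str]] = ROUTING_RULES) -> List[str]:
    """Return only the Doors whose rules match; an empty list means the core answers alone."""
    text = request.lower()
    return [
        door
        for door, patterns in rules.items()
        if any(re.search(pattern, text) for pattern in patterns)
    ]


print(route("Fix the bug in this function"))                # ['software']
print(route("What is the capital of France?"))              # []
print(route("Estimate the risk of this robot trajectory"))  # ['robotics', 'finance']
```

Because the router only returns Door names, a classifier or learned policy could later replace `route` behind the same signature without changes to the Doors themselves.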

MCP servers and tool interfaces

For many tasks, text is not enough.

Gödel uses MCP (Model Context Protocol) servers to connect expert clusters to real tools: APIs, databases, code execution, simulations, robots, etc.

Some examples:

calling a build system when generating code

running robot simulations

accessing medical guidelines or documentation libraries

fetching company data safely

This is what enables Gödel AI to do things, not only talk about them.

Learn about function-calling patterns in LLMs:

https://platform.openai.com/docs/guides/function-calling

https://arxiv.org/abs/2210.03629
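MCP messages are JSON-RPC 2.0 requests, and tools are invoked through a `tools/call` method whose params carry the tool `name` and its `arguments`. The sketch below handles just that one request shape with the standard library. It is not the official MCP SDK: a real server also implements initialization, tool listing (`tools/list`), and a transport such as stdio. The `get_order_status` tool and its in-memory data are hypothetical.

```python
import json
from typing import Any, Callable, Dict

# Registry of callable tools, keyed by the name the model uses in a tool call.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(name: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Decorator that registers a function under a tool name."""
    def register(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[name] = fn
        return fn
    return register


# Hypothetical in-memory stand-in for a company database.
_ORDERS = {"A-100": "shipped", "A-101": "processing"}


@tool("get_order_status")
def get_order_status(order_id: str) -> str:
    if order_id not in _ORDERS:
        raise ValueError(f"unknown order {order_id}")
    return _ORDERS[order_id]


def handle_request(raw: str) -> str:
    """Handle one JSON-RPC 2.0 request shaped like an MCP tools/call."""
    request = json.loads(raw)
    reply: Dict[str, Any] = {"jsonrpc": "2.0", "id": request.get("id")}
    if request.get("method") != "tools/call":
        reply["error"] = {"code": -32601, "message": "Method not found"}
        return json.dumps(reply)
    params = request.get("params", {})
    fn = TOOLS.get(params.get("name"))
    if fn is None:
        reply["error"] = {"code": -32602, "message": f"Unknown tool: {params.get('name')}"}
        return json.dumps(reply)
    try:
        text, is_error = str(fn(**params.get("arguments", {}))), False
    except Exception as exc:  # report tool failures to the model instead of crashing
        text, is_error = str(exc), True
    reply["result"] = {"content": [{"type": "text", "text": text}], "isError": is_error}
    return json.dumps(reply)
```

Tool failures come back as a normal result flagged `isError`, so the model can see what went wrong and try again, while protocol mistakes (an unknown method or tool) are reported as JSON-RPC errors.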

Verifiers and safety

Every serious domain needs checks.

Gödel integrates verifiers inside Doors to reduce hallucinations and prevent unsafe outputs.

Examples:

code: run linters, type checkers, automated tests

robotics: collision simulation and path validation

healthcare: policy and guideline consistency checks

Verifiers act like a safety net: they catch many errors before the model returns an answer.
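For code, the generate-then-verify loop can be sketched as follows, assuming verifiers are functions that return an error message or `None`. The two checks here (a syntax check via Python's `ast` module and a tiny unit-test runner) stand in for the linters, type checkers, and test suites listed above, and the lambda returning successive drafts is a hypothetical stand-in for the model. Failures are fed back to the generator, and if nothing passes within the attempt budget the loop returns `None` rather than an unverified answer.

```python
import ast
from typing import Callable, List, Optional, Sequence, Tuple

# A verifier returns an error message, or None when the candidate passes.
Verifier = Callable[[str], Optional[str]]


def syntax_check(source: str) -> Optional[str]:
    try:
        ast.parse(source)
    except SyntaxError as exc:
        return f"syntax error: {exc.msg} (line {exc.lineno})"
    return None


def make_test_check(func_name: str, cases: Sequence[Tuple[tuple, object]]) -> Verifier:
    """Build a verifier that runs func_name on each (args, expected) case."""
    def check(source: str) -> Optional[str]:
        namespace: dict = {}
        exec(source, namespace)  # a real Door would run untrusted code in a sandbox
        fn = namespace.get(func_name)
        if fn is None:
            return f"missing function {func_name}"
        for args, expected in cases:
            got = fn(*args)
            if got != expected:
                return f"{func_name}{args} returned {got!r}, expected {expected!r}"
        return None
    return check


def generate_with_verification(
    generate: Callable[[List[str]], str],
    verifiers: List[Verifier],
    max_attempts: int = 3,
) -> Tuple[Optional[str], List[str]]:
    """Regenerate until every verifier passes, feeding failures back to the generator."""
    feedback: List[str] = []
    for _ in range(max_attempts):
        candidate = generate(feedback)
        for verify in verifiers:  # cheapest check first; stop at the first failure
            error = verify(candidate)
            if error is not None:
                feedback.append(error)
                break
        else:
            return candidate, feedback
    return None, feedback  # nothing passed: refuse rather than return unverified output


# Stand-in for a model: three successive drafts of the same function.
DRAFTS = [
    "def add(a, b) return a + b",
    "def add(a, b):\n    return a - b\n",
    "def add(a, b):\n    return a + b\n",
]
result, notes = generate_with_verification(
    lambda fb: DRAFTS[len(fb)],
    [syntax_check, make_test_check("add", [((2, 3), 5), ((0, 0), 0)])],
)
```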

Retrieval and grounding

Some problems require facts, not guesses.

Gödel integrates retrieval systems so model decisions can be grounded in actual documents, databases, or knowledge bases.

RAG overview:

https://arxiv.org/abs/2005.11401

https://arxiv.org/abs/2112.04426

Grounding reduces hallucinations and makes outputs explainable, because each answer can point to the evidence it relies on.
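A minimal retrieval step might look like the sketch below. It swaps the dense neural retrievers used in the RAG papers for simple bag-of-words cosine similarity so that it runs with the standard library alone, and `DOCS` is a hypothetical knowledge base. The retrieved passages go into the prompt with ids the model is asked to cite, which is what makes the answer checkable.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: Dict[str, str], k: int = 2) -> List[Tuple[str, float]]:
    """Rank documents by similarity to the query; drop ones with no overlap."""
    q = Counter(tokenize(query))
    scored = [(doc_id, cosine(q, Counter(tokenize(text)))) for doc_id, text in docs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [(doc_id, score) for doc_id, score in scored[:k] if score > 0]


def grounded_prompt(query: str, docs: Dict[str, str], k: int = 2) -> str:
    """Build a prompt that restricts the model to retrieved sources and asks for citations."""
    hits = retrieve(query, docs, k)
    sources = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id, _ in hits)
    return (
        "Answer using only the sources below and cite them by id. "
        "If they do not contain the answer, say so.\n"
        f"{sources}\n\nQuestion: {query}"
    )


DOCS = {  # hypothetical knowledge base
    "refund-policy": "Refunds are issued within 14 days of purchase.",
    "shipping": "Orders ship within 2 business days.",
    "warranty": "Hardware carries a one year warranty.",
}
print(grounded_prompt("How many days until refunds are issued?", DOCS))
```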

Efficient inference

Both the core and Doors need to run efficiently.

Modern serving stacks such as TGI and vLLM support continuous batching, quantized weights, and high-throughput token generation.

Text Generation Inference (TGI):

https://github.com/huggingface/text-generation-inference

vLLM:

https://github.com/vllm-project/vllm

Quantization library bitsandbytes:

https://github.com/TimDettmers/bitsandbytes

Efficient inference allows Gödel to run both locally and in the cloud.
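Quantization matters for local deployment because weight memory scales with bits per weight: 7 billion parameters take about 14 GB at 16 bits, 7 GB at 8 bits, and 3.5 GB at 4 bits, before counting activations and the KV cache. The sketch below reproduces that arithmetic and shows per-tensor absmax int8 quantization, the basic idea behind 8-bit weights. It is illustrative only: bitsandbytes uses more refined schemes (for example, finer-grained scaling and special handling of outlier values), and the 7-billion figure is just an example size.

```python
from typing import List, Tuple


def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate weight storage only (no activations or KV cache)."""
    return num_params * bits_per_weight / 8 / 1e9


def quantize_absmax(values: List[float]) -> Tuple[List[int], float]:
    """Per-tensor absmax int8 quantization: scale so the largest magnitude maps to 127."""
    absmax = max(abs(v) for v in values)
    scale = 127.0 / absmax if absmax > 0 else 1.0
    return [max(-127, min(127, round(v * scale))) for v in values], scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    return [q / scale for q in quantized]


weights = [0.5, -1.0, 0.25]
q, scale = quantize_absmax(weights)
print(q)  # [64, -127, 32]
for bits in (16, 8, 4):
    print(bits, "bit:", weight_memory_gb(7e9, bits), "GB")
```

Each restored weight is off by at most half a quantization step (`0.5 / scale`), which is the precision traded for halving memory relative to 16-bit weights.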

Hybrid deployment

Gödel AI can run in multiple configurations depending on user needs.

Local

The core model can run on-device for privacy and low latency.

Cloud

Heavy Doors run in the cloud and activate only when needed.

Hybrid

The router decides when a cloud call is worth it.

This keeps cost and latency low while still giving access to the heavier Doors when a task needs them.
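The hybrid decision can be sketched as a small policy function. Everything here is an assumption for illustration: the `local_confidence` signal, the 0.7 threshold, and the rule that private data never leaves the device are hypothetical choices, not documented Gödel behavior.

```python
from dataclasses import dataclass


@dataclass
class Request:
    text: str
    contains_private_data: bool
    local_confidence: float  # hypothetical: the core's own estimate (0..1) that it can answer well


def choose_target(req: Request, threshold: float = 0.7, cloud_available: bool = True) -> str:
    """Return "local" or "cloud" for one request."""
    if req.local_confidence >= threshold:
        return "local"  # the on-device core is good enough: cheapest and fastest
    if req.contains_private_data or not cloud_available:
        return "local"  # privacy or connectivity rules out escalation in this sketch
    return "cloud"  # worth paying for a heavier cloud-hosted Door
```

Keeping the escalation rule in one explicit function makes it easy to test and to tune the threshold against real cost and quality data.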

Why this architecture matters

Gödel AI avoids the trap of trying to solve everything with a single giant model.

Instead, it creates a system where reasoning, specialization, grounding, and verification all work together.

The result is a system that is adaptable, efficient, and capable of doing real-world tasks safely.

Other models answer questions.

Gödel AI is designed to operate systems, write production code, control robots, and solve domain-specific problems.

Future intelligence, today.